Organizations typically keep their data on an internal network so that unauthorized people cannot connect to it; running their own network infrastructure lets them maintain the level of security they want. Users, however, also need to reach that private network when they are away from the office and on their own internet connection. A VPN (Virtual Private Network) solves this problem by allowing authorized remote access to an organization’s private network.

Working at a fully remote company like Mattermost means employees need a VPN connection to access internal private infrastructure and resources. Many companies host those VPN connections on their own servers with OpenVPN, a widely used software package and protocol. We also chose it initially as a quick and reliable way to access our internal infrastructure. After using it for a while, we needed a better solution in terms of:

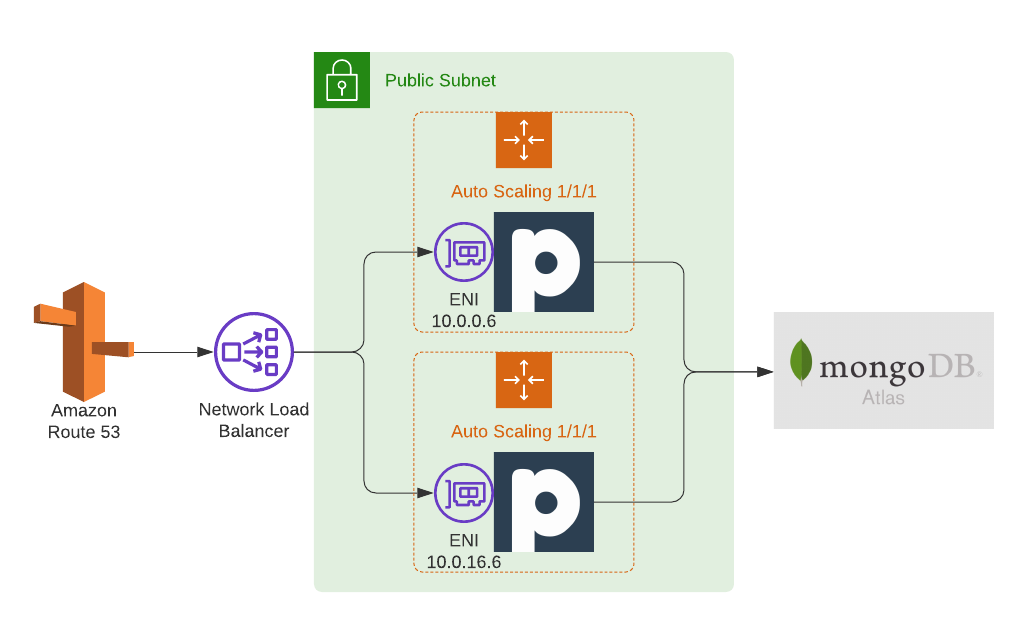

To deploy Pritunl in our infrastructure, we used Terraform. The module we wrote can be found here as an example of how to deploy Pritunl. The infrastructure consists of:

We chose two ASGs with one instance each because every instance must keep the same Elastic Network Interface (ENI), and therefore the same private and public IPs. This is useful when whitelisting those IPs in the Security Groups of the internal services that Pritunl VPN needs to access, such as our internal GitLab.

This can be achieved by attaching those ENIs as secondary network interfaces on the instances; see the appendix Attach second ENI.

You can manually create two ENIs (we selected the sixth address of each subnet, 10.0.0.6 and 10.0.16.6) and attach public IPs to them. Then you can pass the list of ENI IDs to Terraform through the variable (list) fixed_eni.
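As a minimal sketch of how the fixed addresses above can be derived, the following Python snippet picks the sixth address of each subnet. The /20 CIDR blocks are an assumption, inferred from the two example addresses and the /20 prefix used in the netplan configuration in the appendix; adjust them to match your own VPC.

```python
import ipaddress

# Assumed subnet CIDRs (not stated in the article; inferred from the example IPs).
SUBNETS = ["10.0.0.0/20", "10.0.16.0/20"]


def fixed_eni_address(cidr: str, offset: int = 6) -> str:
    """Return the address located `offset` positions after the network address."""
    network = ipaddress.ip_network(cidr)
    return str(network[offset])


fixed_ips = [fixed_eni_address(cidr) for cidr in SUBNETS]
print(fixed_ips)  # ['10.0.0.6', '10.0.16.6']
```

These are the private IPs you would assign when creating the two ENIs by hand.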

Initially, we checked the AWS DocumentDB solution, but it was quite expensive, as it starts from $0.28/hr for one db.r5.large instance.

Next, we deployed two instances and installed/configured a MongoDB cluster, but maintaining and making sure that HA worked effectively was a big overhead.

In the end, we chose MongoDB Atlas because it is cheaper (it also offers a free tier) and easier to set up.

After creating the MongoDB Atlas cluster, we added Pritunl’s public IPs to the cluster’s whitelist and connected to the cluster from a local machine to create a new database called pritunl (guide on how-to-connect). Also check the Pritunl documentation for MongoDB Atlas.

To set up the MongoDB URI (mongodb+srv://pritunl:password@pritunl-mongodb-xxxxx.mongodb.net/pritunl) use the variable (string) mongodb_uri on Terraform.
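A small, hedged sketch of how such a URI can be assembled safely: special characters in the username or password must be percent-encoded, otherwise the URI is parsed incorrectly. The helper name build_mongodb_uri and the sample password are hypothetical; the host is the placeholder from the URI above.

```python
from urllib.parse import quote_plus


def build_mongodb_uri(user: str, password: str, host: str, database: str) -> str:
    """Build a mongodb+srv URI, percent-encoding the credentials."""
    return (
        f"mongodb+srv://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}/{database}"
    )


# Placeholder values for illustration only.
uri = build_mongodb_uri(
    "pritunl", "p@ss/word", "pritunl-mongodb-xxxxx.mongodb.net", "pritunl"
)
print(uri)
# mongodb+srv://pritunl:p%40ss%2Fword@pritunl-mongodb-xxxxx.mongodb.net/pritunl
```

The resulting string is what you would pass to the mongodb_uri variable.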

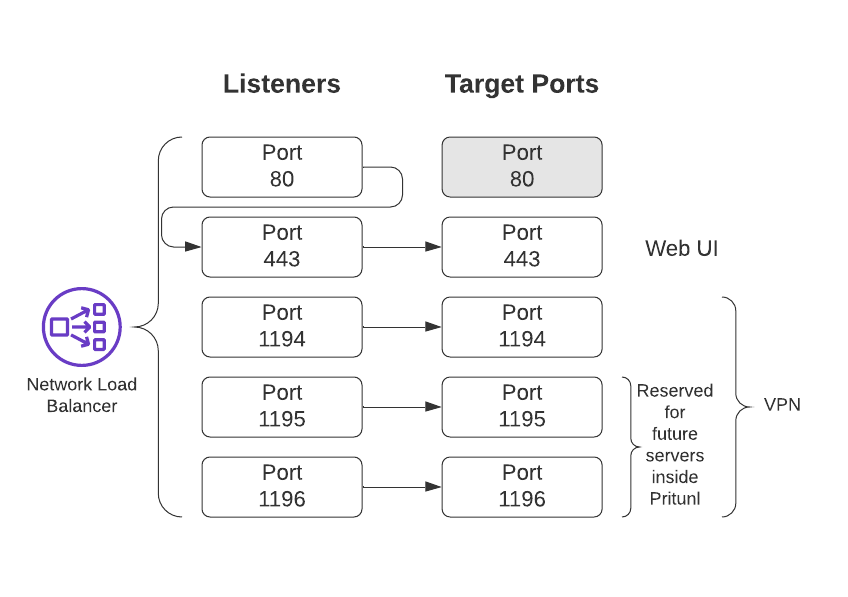

The Network Load Balancer (NLB) in front of the instances has five listeners, as shown below. Three of them are for VPN traffic (on ports 1194, 1195, and 1196) and can be used by the servers configured inside Pritunl. Currently, we use only port 1194; the other two are reserved for future use.

Pritunl is installed via the userdata. As the instances do not store any configuration items except the MongoDB URI, each instance needs to connect to the MongoDB to obtain the required configuration and then the instance joins the Pritunl cluster.

As per Pritunl documentation:

Run sudo pritunl default-password to get the default username and password.

As per Pritunl documentation:

Navigate to Users and create a new organization, e.g. devs_org

Navigate to Servers and create a new server:

Name: devs_server

Port: 1194 (or any other port that you have set up for VPN access; check the NLB listeners)

DNS Server: 10.0.0.2, 8.8.8.8, where 10.0.0.2 is the DNS resolver of the subnet. The second IP of each subnet is used for DNS resolution, and the VPC where Pritunl is running is peered with all the other VPCs, so it can resolve all the names inside our network.

Remove the 0.0.0.0/0 route from the devs_server.

Select Attach Organization to attach devs_org with devs_server.

Select Attach host to attach the two hosts (instances).

Select Add Route (ensure that the server points to devs_server).

Start the server with the Start Server button.

To enable OneLogin, Pritunl Enterprise (with a subscription) is needed; otherwise the configuration does not appear in the Settings. You then need to set up a new app inside OneLogin (admin access is required) and paste the following information into Pritunl:

OneLogin

1234567

https://your-company.onelogin.com/trust/saml2/http-redirect/sso/xxxx-xxxx-xxxx-xxxx

https://app.onelogin.com/saml/metadata/xxxx-xxxx-xxxx-xxxx

Below is the bash script to attach a second ENI for Ubuntu 18.04 as per AWS documentation.

The script can also be reused for other purposes.

```bash
# ----- Add fixed Network Interface -----
printf "\n### Installing AWS CLI ###\n"
apt install awscli -y

printf "\n### Attaching ENI to instance ###\n"
INSTANCEID=$(curl http://169.254.169.254/latest/meta-data/instance-id)
MACS=$(curl http://169.254.169.254/latest/meta-data/network/interfaces/macs/ | head -n1)
SUBNETID=$(curl "http://169.254.169.254/latest/meta-data/network/interfaces/macs/$MACS/subnet-id")
NETWORKINTERFACEID=$(aws ec2 describe-network-interfaces --filters Name=tag:OnlyFor,Values=pritunl Name=status,Values=available Name=subnet-id,Values=$SUBNETID --query 'NetworkInterfaces[0].NetworkInterfaceId' --region us-east-1 --output text)
NETWORKINTERFACEIP=$(aws ec2 describe-network-interfaces --network-interface-ids $NETWORKINTERFACEID --region us-east-1 --query 'NetworkInterfaces[].[PrivateIpAddress]' --output text)
aws ec2 attach-network-interface --network-interface-id $NETWORKINTERFACEID --instance-id $INSTANCEID --device-index 1 --region us-east-1

printf "\n### Configuring instance to use secondary ENI ###\n"
SUFFIXDEFAULTIP=$(echo $NETWORKINTERFACEIP | sed 's/\.[^.]*$//')
cat <<EOF > /etc/netplan/51-eth1.yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    eth1:
      addresses:
        - $NETWORKINTERFACEIP/20
      dhcp4: no
      routes:
        - to: 0.0.0.0/0
          via: $SUFFIXDEFAULTIP.1 # Default gateway
          table: 1000
        - to: $NETWORKINTERFACEIP
          via: 0.0.0.0
          scope: link
          table: 1000
      routing-policy:
        - from: $NETWORKINTERFACEIP
          table: 1000
EOF

netplan --debug apply
```
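The script derives the default gateway by dropping the last octet of the ENI address and appending .1, which works when the ENI address shares its first three octets with the subnet's network address (as with 10.0.0.6 and 10.0.16.6). As a hedged alternative sketch, the same values can be computed from the subnet itself: AWS reserves the first address after the network address for the VPC router. The function name netplan_values is hypothetical, and the /20 prefix is taken from the netplan file above.

```python
import ipaddress


def netplan_values(private_ip: str, prefix_len: int = 20) -> dict:
    """Compute the address, gateway, and source IP used in the netplan file."""
    iface = ipaddress.ip_interface(f"{private_ip}/{prefix_len}")
    # The VPC router sits on the first address after the network address.
    gateway = iface.network[1]
    return {
        "address": str(iface),    # e.g. 10.0.16.6/20
        "gateway": str(gateway),  # e.g. 10.0.16.1
        "source": str(iface.ip),  # used in the routing-policy "from" rule
    }


values = netplan_values("10.0.16.6")
print(values)

# An address deeper in the same /20 still maps to the same gateway,
# whereas stripping the last octet would yield 10.0.20.1.
print(netplan_values("10.0.20.7")["gateway"])  # 10.0.16.1
```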

To invoke the Pritunl API, we had to make some changes to the Python code that exists here, as shown below:

```python
import requests, time, uuid, hmac, hashlib, base64

BASE_URL = 'https://localhost'
API_TOKEN = 'p7g444S3IZ5wmFvmzWmx14qACXdzQ25b'
API_SECRET = 'OpS9fjxkPI3DclkdKDDr6mqYVd0DJh4i'

def auth_request(method, path, headers=None, data=None):
    auth_timestamp = str(int(time.time()))
    auth_nonce = uuid.uuid4().hex
    auth_string = '&'.join([API_TOKEN, auth_timestamp, auth_nonce,
        method.upper(), path])
    auth_string_bytes = bytes(auth_string, 'utf-8')
    api_secret_bytes = bytes(API_SECRET, 'utf-8')
    auth_signature = base64.b64encode(hmac.new(
        api_secret_bytes, auth_string_bytes, hashlib.sha256).digest())
    auth_headers = {
        'Auth-Token': API_TOKEN,
        'Auth-Timestamp': auth_timestamp,
        'Auth-Nonce': auth_nonce,
        'Auth-Signature': auth_signature,
    }
    if headers:
        auth_headers.update(headers)
    return getattr(requests, method.lower())(
        BASE_URL + path,
        headers=auth_headers,
        data=data,
    )
```
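To check the signing logic without a running Pritunl server, the header construction can be separated from the HTTP call. The sketch below is a variant under stated assumptions, not the code we run: the function name build_auth_headers, the token, the secret, and the /status path are placeholders for illustration. It uses only the standard library and decodes the signature to a string.

```python
import base64
import hashlib
import hmac
import time
import uuid


def build_auth_headers(token, secret, method, path, timestamp=None, nonce=None):
    """Return the signed Auth-* headers for one request."""
    timestamp = timestamp or str(int(time.time()))
    nonce = nonce or uuid.uuid4().hex
    # Same fields, same order, same separator as in auth_request above.
    auth_string = "&".join([token, timestamp, nonce, method.upper(), path])
    digest = hmac.new(
        secret.encode("utf-8"), auth_string.encode("utf-8"), hashlib.sha256
    ).digest()
    return {
        "Auth-Token": token,
        "Auth-Timestamp": timestamp,
        "Auth-Nonce": nonce,
        "Auth-Signature": base64.b64encode(digest).decode("ascii"),
    }


# Fixed timestamp and nonce make the output reproducible for testing.
headers = build_auth_headers(
    "example-token", "example-secret", "get", "/status",
    timestamp="1600000000", nonce="abc123",
)
print(sorted(headers))
```

Passing a fixed timestamp and nonce is only for testing; real requests should let both default so that every call gets a fresh nonce.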